Artificial Intelligence

Artificial intelligence (AI) is the intelligence exhibited by machines, as opposed to the natural intelligence of humans and other animals. AI research is generally considered to have begun in 1956, following a workshop held that year at Dartmouth College.

Research in artificial intelligence has gone through many ups and downs, driven by investment, by the expectations surrounding the technology, and by the computing capacity available for research. During one period between 1974 and 1980, known as the 'winter of artificial intelligence,' funding dried up and research progress stalled.

Between 1980 and the late 1990s, the field's popularity fluctuated, and investment appeared and disappeared with it, until computers finally reached sufficient computing capacity.

A Bit of History

Since its beginnings, research in artificial intelligence has gone through countless ups and downs. Between 1956 and 1974, it enjoyed a golden age when scientists predicted that a computer with cognitive capacity equal to that of a human being would be achieved in a short time, leading to million-dollar investments in research. However, these estimates turned out to be incorrect, and expectations were not met, leading to the disappearance of investments. This period, between 1974 and 1980, is known as the 'winter of artificial intelligence.' In addition to financial problems, projects also faced limited computational and data storage capacity, which hindered the necessary processes and experiments.

From 1980 until the late 1990s, the field of artificial intelligence experienced fluctuations in its popularity, accompanied by the corresponding appearance and disappearance of investments. By the end of the 1990s, computers began to have enough capacity to make advances in the field. In fact, the computer used to play chess in 1997 was 10 million times more powerful than the one used for the same purpose in 1951.

A Change in Perspective

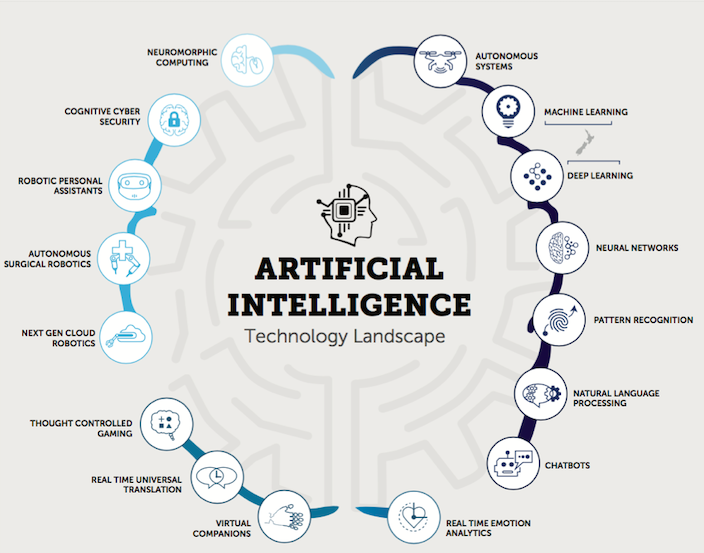

Since then, the perception of artificial intelligence has changed radically. More powerful computers and the availability of large amounts of data have enabled a series of significant advances, albeit in a different direction from the one pursued previously. Rather than general human-level intelligence, progress has come in machine learning, neural networks, and deep learning, which, together with bots, are now the predominant branches of artificial intelligence. Other branches or sub-branches include predictive analytics, natural language understanding, and facial recognition.

Predictive Analytics

Predictive analysis is a technique that is part of machine learning. Its goal is to use predictive models to identify patterns in historical and transactional data to predict future risks and opportunities. This allows organizations to prepare in advance. For example, in the context of a production chain, predictive analysis could foresee a machine failure, enabling the problem to be resolved before it causes a complete breakdown and thus avoiding or reducing production interruptions.
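As a rough illustration of the machine-failure example, the sketch below fits a straight line to past sensor readings and estimates when the trend would cross a failure threshold. The readings, the threshold, and the function names are all hypothetical, and real predictive models are usually far richer than a linear trend.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def hour_at_threshold(hours, readings, threshold):
    """Estimate the operating hour at which the fitted trend reaches the threshold.

    Returns None when the trend is flat or decreasing.
    """
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


# Hypothetical vibration readings (mm/s) logged every 10 operating hours.
hours = [0, 10, 20, 30, 40]
vibration = [2.0, 2.5, 3.0, 3.5, 4.0]
FAILURE_THRESHOLD = 7.0  # assumed limit, for illustration only

predicted_hour = hour_at_threshold(hours, vibration, FAILURE_THRESHOLD)
print(f"Trend reaches the threshold at about hour {predicted_hour:.0f}")
```

With an estimate like this, maintenance could be scheduled before the predicted hour instead of after the breakdown.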

Netflix is a company that uses predictive analysis to improve its services, especially in its recommendation engine. Around 80% of Netflix users consume content recommended by the platform, which has contributed to reducing the service cancellation rate. Additionally, Netflix uses data on viewing behavior, such as the time of day and the amount of content watched, to enhance its recommendations.

Natural Language Understanding (NLU)

Natural Language Understanding (NLU) is a discipline within Natural Language Processing (NLP). It is considered one of the most complex problems in artificial intelligence, one of the so-called "AI-hard" problems. NLU is gaining popularity thanks to its application in large-scale content analysis, where it can work through large volumes of both structured and unstructured data.

An example of the use of this technology is virtual assistants like Alexa, Siri, or Google Assistant. For instance, Siri, Apple's assistant, can recognize commands through the training of its neural network. The system uses probability calculations to detect if the recorded audio signal matches the phrase "Hey, Siri," comparing it to the original model. When a certain threshold is reached, the system activates and responds to the user.

Machine Learning

One of the fields of study that has recently received interest is machine learning, where the ability of a machine to learn and act without being explicitly programmed for it is studied. The term is highly popular in the field of artificial intelligence-related technologies. It involves the use of algorithms that allow machines to learn by imitating the way humans learn.

Machine learning is now one of the booming technologies in the business world. This form of artificial intelligence offers several significant advantages to businesses, such as its analytical capabilities, which provide a valuable and autonomous source of information.

Predictive analysis offers immeasurable value to businesses, allowing them to predict market trends, make data-driven predictions, minimize risks, address problems before they occur, and make informed decisions.

In addition to prediction, machine learning algorithms are commonly used by companies to reduce errors in operational and management systems, enhance data security, strengthen the analytical capabilities of data analysis tools, and automate processes.

According to recent research, machine learning is among the most in-demand skills in the job market, and a recent study by Algorithmia has shown a significant increase in the resources businesses allocate to this technology.

What is Machine Learning and How Does It Work?

Machine learning is a discipline within artificial intelligence that allows machines to learn in a manner similar to humans, using mathematical algorithms. Thanks to this, machines can perform complex analyses without the need for specific programming.

The goal of this technology is to provide machines with advanced analytical capabilities, enabling them to solve problems without human intervention, through identification, classification, and prediction.

The concept of machine learning may seem like something out of science fiction, but it is already present in our current world.

Machine learning algorithms have the ability to identify patterns in large amounts of data, and with that information, they can draw conclusions and conduct analyses without needing to be explicitly programmed.

Machine learning is based on statistics and mathematics, and its aim is to provide automatic and intelligent solutions to complex problems by identifying and classifying patterns.

While at its core, machine learning is a mathematical technology, its applications are endless and are used in systems for predictive analysis, generating automatic responses, and in a wide range of other fields.

In essence, machine learning is a technology that allows machines to learn on their own and provides a wealth of valuable information through data analysis, and its impact is becoming increasingly significant in the business world.

Machine learning is a technology with a multitude of practical applications. Although it may seem futuristic, it is actually part of our everyday life.

Video and music streaming platforms like Netflix and Spotify use machine learning algorithms to provide personalized recommendations to their users. Virtual assistants like Alexa and Siri, which respond to human questions, are also clear examples of how machine learning is used. Furthermore, this technology is employed to improve search results on engines like Google, for the operation of robots and autonomous vehicles, for disease prevention, and for creating antivirus software that detects malicious software.

In the business world, machine learning has become a crucial technology, especially due to its predictive capabilities.

Types of Machine Learning: Supervised and Unsupervised Algorithms

As artificial intelligence has evolved and machine learning has become more prominent, various branches and types of algorithms within machine learning have emerged.

Machine learning is mainly divided into two models: supervised machine learning and unsupervised machine learning.

Additionally, there is a debate about whether deep learning is a subcategory of machine learning. Deep learning has advanced to the point where some consider it as an independent field of study.

On the other hand, the nature of machine learning projects can vary depending on how algorithms are applied or utilized. Through the necessary programming and coding, these algorithms can be adapted to virtually any process or operation.

Despite the growing excitement around machine learning, organizations must understand that its potential is directly linked to the quality and performance of the programmed algorithms. Much of what machine learning does involves statistical data analysis, and just like in any statistical analysis, the results depend on the logic applied in the analysis and, in this case, the work of programmers and developers, as well as the business logic implemented in the machine learning project.

Supervised Machine Learning vs Unsupervised Machine Learning

Supervised machine learning refers to machine learning algorithms that "learn" from labeled data provided by humans. In this case, human intervention is required to label, classify, and input data into the algorithm. Because the input data has been labeled and classified in advance, the algorithm can be trained to produce the expected output. There are two types of problems that supervised algorithms address:

1. Classification: Used to classify objects into different categories. For example, determining whether a patient is sick or if an email is spam.

2. Regression: Used to predict a numerical value, such as house prices based on different characteristics or hotel occupancy demand.

Some practical applications of supervised learning include:

- Predicting insurance claims costs for insurance companies.

- Detecting bank fraud by financial institutions.

- Forecasting machinery failures in a company.
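To make the difference between classification and regression concrete, here is a minimal sketch in plain Python. It uses the k-nearest-neighbours idea for both: a majority vote among labeled neighbours gives a category, and an average of their numeric targets gives a value. The features, data values, and function names are invented for illustration.

```python
from collections import Counter


def k_nearest(train, query, k):
    """Return the targets of the k training examples closest to the query."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    ranked = sorted(train, key=lambda example: squared_distance(example[0], query))
    return [target for _, target in ranked[:k]]


def classify(train, query, k=3):
    """Classification: majority vote among the k nearest labels."""
    votes = Counter(k_nearest(train, query, k))
    return votes.most_common(1)[0][0]


def regress(train, query, k=3):
    """Regression: average of the k nearest numeric targets."""
    neighbors = k_nearest(train, query, k)
    return sum(neighbors) / len(neighbors)


# Labeled emails: (links, exclamation marks) -> category chosen by a human.
emails = [
    ((0, 0), "not spam"),
    ((1, 0), "not spam"),
    ((1, 1), "not spam"),
    ((7, 5), "spam"),
    ((8, 7), "spam"),
    ((9, 8), "spam"),
]

# Labeled houses: (square metres, rooms) -> sale price.
houses = [
    ((50, 2), 100_000),
    ((60, 2), 120_000),
    ((70, 3), 140_000),
    ((150, 5), 300_000),
]

print(classify(emails, (8, 6)))   # a category
print(regress(houses, (62, 2)))   # a number
```

In both cases the algorithm only works because a human supplied the labels or prices beforehand, which is what makes the learning supervised.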

In contrast to supervised learning, unsupervised machine learning involves no direct human intervention. Algorithms learn from unlabeled data and seek patterns or relationships among them. In this mode, the input data is unlabeled and no human intervention is required to classify it. There are two types of algorithms used in unsupervised learning:

1. Clustering: Used to group the input data into different clusters. For example, segmenting customers based on their purchasing patterns.

2. Association: Used to discover rules within a dataset. For example, identifying that customers who purchase a car also purchase insurance.
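The association idea can be sketched by counting how often items appear together. The snippet below computes the support and confidence of the rule "car -> insurance", two measures commonly used to score such rules. The transactions and function names are hypothetical.

```python
def count_containing(transactions, items):
    """Number of transactions that contain every item in `items`."""
    items = set(items)
    return sum(1 for t in transactions if items <= set(t))


def support(transactions, items):
    """Fraction of all transactions that contain the whole item set."""
    return count_containing(transactions, items) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Among transactions with the antecedent, the share that also has the consequent."""
    both = count_containing(transactions, set(antecedent) | set(consequent))
    return both / count_containing(transactions, antecedent)


# Invented purchase records; no labels are supplied.
transactions = [
    {"car", "insurance"},
    {"car", "insurance", "roof rack"},
    {"car"},
    {"bicycle", "helmet"},
    {"car", "insurance"},
]

print("support:", support(transactions, {"car", "insurance"}))
print("confidence:", confidence(transactions, {"car"}, {"insurance"}))
```

A high confidence for a rule suggests, but does not prove, that the two purchases are related.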

Unsupervised learning finds applications in various areas such as:

- Customer segmentation in a bank.

- Patient grouping in a hospital.

- Content recommendation systems based on user consumption history on video streaming platforms.

Supervised Algorithms

Supervised algorithms are those that can work with new data based on what they have learned in the past.

Within supervised machine learning algorithms, we find two types: classification algorithms and regression algorithms.

Unsupervised Algorithms

Unsupervised machine learning algorithms are capable of drawing conclusions from datasets without having been trained on labeled examples.

When working with unsupervised machine learning algorithms, the data is not organized into predefined categories, and no labeled datasets are provided. Nowadays, there are many types of machine learning algorithms that attempt to discover correlations without any external input, using only the raw data available.

Reinforcement Learning Algorithms

Reinforcement learning allows software agents to determine the ideal behavior automatically within a specific context, based on feedback from their environment.

To obtain information about behavior, the machine only needs simple feedback, known as the reinforcement signal. This behavior can be learned once or continually adjusted and improved over time.

Reinforcement learning algorithms are designed to acquire knowledge from previous experience. In other words, these algorithms can address problems based on the results obtained in similar situations they have previously experienced.

Reinforcement Learning works by subjecting the machine to a series of challenges in which it must make a decision. If the decision is correct, the system receives a reward. After several challenges, the algorithm is capable of making correct decisions on its own, without the need for human intervention.
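The reward loop described above can be sketched as follows. The agent faces a small set of challenges, tries actions, and nudges its value estimates toward the reward it receives. The environment (`CORRECT`), the hyperparameters, and the function names are assumptions chosen to keep the example tiny. It uses a simple epsilon-greedy rule, not any particular production algorithm.

```python
import random


def train_agent(correct_actions, n_actions, episodes=3000,
                epsilon=0.2, alpha=0.5, seed=0):
    """Learn value estimates from reward feedback alone.

    correct_actions[s] is the rewarded action for challenge s. The agent never
    reads this list directly; it only sees the 0/1 reward after each decision.
    """
    rng = random.Random(seed)
    n_states = len(correct_actions)
    values = [[0.0] * n_actions for _ in range(n_states)]

    for _ in range(episodes):
        state = rng.randrange(n_states)          # a new challenge
        if rng.random() < epsilon:               # explore occasionally
            action = rng.randrange(n_actions)
        else:                                    # otherwise exploit what is known
            action = max(range(n_actions), key=lambda a: values[state][a])
        reward = 1.0 if action == correct_actions[state] else 0.0
        # Move the estimate for this (state, action) pair toward the reward.
        values[state][action] += alpha * (reward - values[state][action])

    return values


def greedy_policy(values):
    """Pick the highest-valued action for every state."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in values]


CORRECT = [2, 0, 1]  # hypothetical environment: the rewarded action per challenge
learned = greedy_policy(train_agent(CORRECT, n_actions=3))
print(learned)
```

After enough challenges, the learned policy picks the rewarded action for each state without anyone having told the agent what the right answers were.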

Classification and clustering

Classification and clustering are two methods of pattern identification used in machine learning. Although both techniques have certain similarities, the difference lies in the fact that classification relies on predefined classes to assign objects, while clustering identifies similarities among objects, grouping them based on these common characteristics that differentiate them from other groups of objects. These groups are known as "clusters."

In the field of machine learning, clustering falls under unsupervised learning; in other words, for these types of algorithms, we only have input data (unlabeled) from which we need to gather information without knowing the expected output in advance.

Clustering is used in projects for companies looking to find commonalities among their customers in order to identify groups and tailor products or services. If a significant percentage of customers share certain characteristics (age, family type, etc.), it can justify a particular campaign, service, or product.
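A minimal sketch of customer clustering, using a plain k-means loop written from scratch, is shown below. The customer attributes, the starting centroids, and the function name are invented for illustration. K-means is only one of several clustering algorithms that could be used here.

```python
def k_means(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, move each
    centroid to the mean of its assigned points, and repeat until stable."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    assignments = []
    for _ in range(iterations):
        assignments = [
            min(range(len(centroids)),
                key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:              # nothing moved: converged
            break
        centroids = new_centroids
    return assignments, centroids


# Invented customers: (age, purchases per year). No labels are given.
customers = [(22, 40), (25, 35), (24, 38), (61, 5), (58, 8), (64, 6)]
initial = [(20, 30), (60, 10)]  # starting centroids chosen by hand

groups, centers = k_means(customers, initial)
print(groups)
print(centers)
```

The algorithm is never told what the groups mean. Interpreting them, for example as "young frequent buyers" and "older occasional buyers", is left to the analyst.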

On the other hand, classification belongs to supervised learning. This means that we have knowledge of the input data (labeled in this case) and the possible outputs of the algorithm. There is binary classification, which addresses problems with two possible responses (such as "yes" and "no"), and multiclass classification, for problems with more than two classes, such as "excellent," "average," and "poor."

Classification is used in various fields, such as biology, Dewey Decimal classification for books, and email spam detection, among others.

At Bismart, we use both classification and clustering in our projects, which span various sectors. For instance, in the social services sector, we have employed clustering to identify groups of the population that use specific social services. Using data from social services, we have been able to cluster groups of people who use similar services based on their characteristics (number of dependents, degree of dependency, marital status, etc.). This has allowed us to anticipate the type of service a new social service user will need by comparing their attributes to those of existing clusters.

Classification is used when there is a need to identify users or customers to make decisions about future product launches or campaigns. For example, at Bismart, we carried out a project for the insurance sector in which the client needed to classify customers based on their likelihood of making claims, enabling the classification of insurance policies based on the predicted number of accidents. This allows the company to select customers with a lower number of claims and exclude those with a high number.

Machine Learning Projects

The process of a machine learning project consists of three key stages.

Data Acquisition: Data can be obtained from various sources, including the web, databases, audio-to-text transcriptions, etc.

Analysis with Algorithms: A human team collects and analyzes the data before passing it through an algorithm that extracts relevant information.

Decision Making: The algorithm provides a result that is used as a basis for business decision-making in accordance with company guidelines.

Before starting a machine learning project, it is important to consider whether the problem requires artificial intelligence. This implies the existence of a significant amount of relevant data. It is crucial to avoid basing a project on insufficient or low-quality data, as this effort would result in a waste of time.

It's important to note that machine learning algorithms identify patterns in data but do not reason. Therefore, they should be used as a support for decision-making rather than as a substitute for it.

Although machine learning algorithms can learn on their own, human supervision is always required. The machine can process graphics, numbers, etc., but it always needs human interpretation to provide value and logic to the results from a business perspective.

When should machine learning be used to solve a problem?

- When writing logical software is difficult and requires a significant amount of time and resources.

- When there are large amounts of data available.

- When the problem fits the structure of machine learning. A machine learning problem should have a clearly defined target variable, such as customer classification or accident prediction. The sufficiency and adequacy of available data should also be assessed to determine if they are suitable for predicting the desired outcome.

Using machine learning requires careful consideration of expectations. In most cases, algorithm results serve as a tool for decision-making and subsequent actions but do not always directly translate into solutions. However, there are exceptions like Netflix, where results are presented as recommendations on the platform without human intervention. In these cases, it's essential to evaluate the impact of potential errors in the results. For example, an error in recommending a TV series is different from an error in predicting a traffic accident.

Machine learning may not be effective at identifying random events since it relies on pattern recognition. Therefore, when faced with a random event, the algorithm may not know how to react as there is no pattern to associate it with. Hence, if the problem to be solved includes many chance occurrences, other solution methods may need to be considered.

Even if the algorithm performs well and provides an effective solution, sometimes the results can be challenging to interpret. Some algorithms, like decision trees, are easier to understand and allow you to see which variables are most relevant, but others may provide complex and hard-to-interpret results even if they are accurate for the objective. The high complexity of the reasons behind the algorithm's results can make it difficult for a human to understand why a particular conclusion was reached.

Supervised machine learning is not the best choice if you don't have enough labeled data to train the algorithm. Labeled data enables the algorithm to learn patterns and provide accurate predictions.

Furthermore, a machine learning project can be slow and require a high tolerance for errors. It's essential to keep in mind that the machine can make mistakes, although the goal is always to minimize the margin of error.

When is machine learning the best solution?

- When scalability is a challenge, as machine learning excels at working with large amounts of data.

- When we need customized results, as machine learning uses our own data to produce specific outcomes for our business.

The best machine learning tools

Next, we will review some of the best machine learning platforms for businesses and users who are not experts in data science. These tools automate the entire machine learning process, from data preparation to model training, evaluation, and implementation in production.

- Azure Machine Learning

Azure Machine Learning is part of Microsoft's extensive range of Big Data tools and supports both supervised and unsupervised machine learning algorithms, as well as deep learning algorithms.

This machine learning platform is very comprehensive and offers different levels of usability for users with varying skill levels. It is specifically designed to help businesses create value through machine learning and is one of the most efficient and quick options for creating and deploying machine learning models.

Azure ML allows users to code in Python or R, work with machine learning models in other programming languages using the SDK, and also work without the need for coding or with minimal code using Azure ML Studio. Additionally, the tool promotes collaboration, is easily integratable, and allows users to create, train, and monitor machine learning and deep learning models in a straightforward manner.

Azure Machine Learning is compatible with other frameworks like TensorFlow, PyTorch, or Scikit-learn, meaning that models developed in these frameworks can be imported into Azure ML without the need for code changes.

- Scikit-learn (Python)

Scikit-learn is the most popular machine learning package in Python due to its simplicity and a wide range of use cases. It supports common machine learning algorithms such as decision trees, linear regression, random forests, k-nearest neighbors, support vector machines (SVM), and stochastic gradient descent.

Scikit-learn provides tools for evaluating models, including the confusion matrix, which summarizes how a model's predictions compare with the true labels.

Scikit-learn is an ideal environment for those who want to get started in the world of machine learning and begin with simple tasks before moving on to more complex options like Azure Machine Learning.
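To show what a confusion matrix actually counts, the sketch below builds one by hand in plain Python. Scikit-learn offers this as `confusion_matrix` in `sklearn.metrics`. The hand-rolled version here is only meant to expose the bookkeeping, and the labels and predictions are invented.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for truth, guess in zip(y_true, y_pred):
        matrix[index[truth]][index[guess]] += 1
    return matrix


def accuracy(matrix):
    """Share of predictions that fall on the diagonal (the correct ones)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


labels = ["not spam", "spam"]
y_true = ["spam", "spam", "not spam", "not spam", "spam", "not spam"]
y_pred = ["spam", "not spam", "not spam", "spam", "spam", "not spam"]

matrix = confusion_matrix(y_true, y_pred, labels)
for label, row in zip(labels, matrix):
    print(f"{label:>8}: {row}")
print("accuracy:", accuracy(matrix))
```

Off-diagonal cells show which kinds of mistakes the model makes, which a single accuracy figure hides.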

- IBM Watson

IBM Watson offers a wide range of machine learning products that allow you to easily access data from different sources without sacrificing confidence in the predictions and recommendations generated by your artificial intelligence models.

The brand offers a wide range of AI capabilities focused on enterprise use, not only enabling the creation of machine learning models but also offering a set of tools to accelerate value through pre-built applications.

- Amazon SageMaker

Amazon SageMaker is a fully managed machine learning service, although it is focused on users with knowledge of data science. The platform enables data scientists and developers to create and train machine learning models quickly and easily, and deploy them directly into production environments.

Additionally, SageMaker provides an integrated Jupyter Notebook instance that makes it easy to access data for exploration and analysis without the need to manage a server. The platform also provides optimized generic machine learning algorithms to run efficiently on large datasets in a distributed environment.

Like Azure Machine Learning, SageMaker natively supports the most popular machine learning and deep learning frameworks.

- MLflow

MLflow is an open-source platform that manages the entire machine learning lifecycle, including experimentation, deployment, and a central model registry. It is designed to work with any machine learning library and programming language.

The Difference Between Machine Learning and Deep Learning

Around 2011, a branch of machine learning known as deep learning (DL) rose to prominence. The popularity of machine learning and advances in computing power allowed the rise of this technology. Although the concept of deep learning is similar to that of machine learning, it uses different algorithms. While traditional machine learning typically works with algorithms such as regression or decision trees, deep learning uses neural networks loosely inspired by the biological neural connections in our brains. In both cases, having quality and reliable data is crucial to ensure effective operation.

Data consolidation, data integration through an ETL process (for example, with SSIS), and data management are essential to guarantee the success of machine learning or deep learning.

Machine Learning refers to mathematical algorithms that enable machines to learn in a similar way to how humans do. However, machine learning is not limited to just algorithms but also encompasses the approach used to address the problem. It is essentially a way to achieve artificial intelligence.

Deep Learning (DL) is a subset of machine learning. In fact, it can be considered the most recent evolution of machine learning. It is an automated algorithm that mimics human perception, inspired by the functioning of our brain and the interconnection between neurons. DL is the technique that comes closest to how humans learn.

Most deep learning methods use a neural network architecture. For this reason, it is often referred to as "deep neural networks." The term "deep" refers to the multiple layers that make up these neural networks.
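The idea of stacked layers can be illustrated with a tiny forward pass written in plain Python. The network below has two hidden layers and an output layer. The weights are hand-picked and untrained, and the layer sizes and function names are assumptions made purely for illustration.

```python
def relu(vector):
    """Rectified linear unit, applied element-wise."""
    return [max(0.0, v) for v in vector]


def dense(inputs, weights, biases):
    """One fully connected layer: weights[j] holds the input weights of neuron j."""
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the input through every layer; hidden layers use ReLU."""
    activation = inputs
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:      # no activation on the output layer
            activation = relu(activation)
    return activation


# Hand-picked weights for a 2 -> 3 -> 2 -> 1 network (untrained, illustrative).
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, -1.0]),
    ([[2.0, -1.0]], [0.5]),
]

print(forward([2.0, 1.0], layers))
```

Each layer transforms the output of the previous one. In a real deep network the weights are learned from data rather than written by hand, and there are usually many more layers and neurons.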

What is the difference between the two?

In simple terms, both machine learning and deep learning mimic the way the human brain learns. The main difference between machine learning and deep learning lies in the types of algorithms used, as deep learning employs more advanced neural networks and is closer to human learning. Both technologies can learn in a supervised or unsupervised manner.

Deep learning use cases in the business world

In the business world, deep learning offers a wide variety of applications and uses that vary depending on the needs of each sector.

Here are some of the most common uses:

- Image and Video Recognition: Deep learning algorithms have significantly improved the quality and accuracy of text, object, logo, and landmark detection algorithms. Deep learning-based computer vision technology has increased accuracy in facial recognition, visual search, and reverse image search.

- Voice Recognition: Also known as Speech-to-Text (STT) or Automatic Speech Recognition (ASR), this technology converts spoken words into text. ASR is used in many sectors, such as healthcare and the automotive industry.

- Manufacturing: Deep learning algorithms enhance the accuracy of industrial systems and devices. For example, they can be used to send automatic alerts about production issues, power industrial robots and sensors, or analyze complex processes.

- Entertainment: Deep learning algorithms drive a wide range of entertainment systems, including content personalization, streaming, and adding sound to silent movies.

- Retail and e-commerce: Some e-commerce applications already use deep learning to improve the shopping experience and customer experience with voice-activated purchases. Additionally, intelligent robots in stores, future trend predictions, and personalized recommendations are also common uses of deep learning in retail.

- Health: Deep learning is used for computer-aided disease detection and diagnosis, sometimes offering performance superior to human experts. Furthermore, deep learning algorithms help improve medical research and drug discovery.

Deep learning software

The business world has access to a range of deep learning applications and software. These include CNTK, TensorFlow, PyTorch, and the Cloudinary Video AI API, among others.

CNTK is an open-source tool specifically designed for commercial-grade deep learning. CNTK allows for the easy combination of common models such as CNN, RNN, LSTM, and feed-forward DNN.

TensorFlow is an open-source framework developed by Google for building and executing deep learning and machine learning algorithms. TensorFlow constructs processes using the concept of a computational graph and can run on CPUs, GPUs, and TPUs.

PyTorch is a deep learning and machine learning framework based on Python and Torch. PyTorch is easy to use and supports distributed training. PyTorch allows for model export using ONNX and is highly compatible with open-source platforms.

The Cloudinary Video AI API is an example of a machine learning solution that doesn't require data science knowledge. The API can be integrated into any web application and enables the processing and management of video content handled by AI.

In conclusion, these deep learning applications and software are useful for automating processes, conducting predictive analysis, and managing AI-driven content in the business world.

Graphics Processing Units (GPU)

GPUs are composed of a large number of processing cores working simultaneously. They specialize in parallel computation, executing many operations at the same time, which makes them an extremely efficient tool for deep learning algorithms. In addition, they offer a wide memory bandwidth, typically 10 to 15 times larger than that of a conventional CPU.

Field Programmable Gate Arrays (FPGAs)

FPGAs are integrated circuits in which the internal network can be reprogrammed depending on the task to be performed. They are an attractive alternative to ASICs, which require a lengthy design and manufacturing process. FPGAs can offer better performance than GPUs for specific workloads, just as an ASIC designed for a specific purpose will generally outperform a general-purpose processor.

High Performance Computing (HPC)

HPC systems are highly distributed computing environments that use thousands of machines to achieve massive processing power. They require high component density and special power and cooling requirements. Deep learning algorithms that require high computational power can take advantage of HPC hardware or HPC services offered by cloud providers such as AWS and Azure.

Artificial Intelligence Use Cases: Health and medicine

At Bismart, we organized an event that covered the scope of technology in clinical management. During the event, various perspectives were shared on the application of technology in clinical management. On one hand, the potential of artificial intelligence to change the functioning of social services was explained through a success case: the pilot test of a predictive model to optimize social service benefits, taking into account population segmentation, the social services catalog, social services archives, budgets, cost per service, etc. In this way, the administration can ensure that resources are allocated to those who need them most, even if they don't request them.

On the other hand, José Manuel Simarro from the Spanish System of Pharmacovigilance of Human Medicines focused on the application of technology for analysis and research. Big Data and Artificial Intelligence accelerate data processing and, therefore, reduce the time required for research.

To conclude, Josep M. Picas pointed out that 40% of diagnoses are incorrect. If the same doctors who fail 40% of the time are teaching in universities and responsible for transferring medical knowledge, it is very likely that the new generations of healthcare professionals will make the same mistakes. Artificial intelligence in this field becomes a support tool that can improve diagnoses. Today, several doctors may have disparate views on the same case. An AI system, by contrast, applies the same criteria to every case and can offer a consistent, data-driven second opinion, although its results still depend on the quality of the data it was trained on and need to be interpreted by a clinician.

Thus, Artificial Intelligence has come to stay, not so much as a tool to replace what we already have but to enhance it and ensure its efficiency. One way to achieve this is through folksonomy.

Artificial Intelligence in Improving Care for the Elderly

Population aging means that the proportion of people over 65 increases without a corresponding growth in the working-age population, which is responsible for sustaining the pension system.

This phenomenon presents various challenges to society. On one hand, there are economic problems, such as the need to finance the pension system and healthcare and social care costs for the elderly. On the other hand, there are direct problems affecting the elderly, such as chronic diseases, comorbidities, loneliness, and loss of physical and mental capabilities. These problems worsen with age, and even though we are living longer, there is no guarantee that these years will be of good quality.

This issue is already a concern today, but according to the WHO, the number of elderly people (over 60 years old) is expected to double by 2050 and triple by 2100. Globally, the population aged 60 and over is growing faster than any other age group. Therefore, it is crucial to start adopting measures and policies that improve the quality of life for the elderly and prevent a negative impact on the economy. One option is for elderly people to take part in paid or unpaid work, receiving some form of compensation in both cases. It has been shown that people who work after retirement age develop symptoms of physical and mental deterioration much later than those who retire earlier. This could also reduce healthcare and social care spending in this age group.

In this regard, artificial intelligence can help alleviate the effects of an aging population.

It is evident that implementing such a system is not easy. In the short term, it will involve costs, and not all elderly people can or want to work.

Currently Implemented Systems

Municipalities are taking steps to improve care for the elderly. For example, the Barcelona City Council has implemented two important projects to improve the lives of elderly people.

The first is the MIMAL project, which offers a teleassistance device for people with cognitive impairments. This device allows caregivers to locate the elderly person at any time through geolocation, increasing their safety and autonomy.

The second project is Vincles BCN. This social innovation project aims to improve the well-being of elderly people who feel lonely and strengthen their social relationships through the use of technology. Elderly people are provided with a device and an application that allows them to communicate with their family and others, stay informed about community activities, and more.

Additionally, Bismart organized the event "The Power of Machine Learning" in Barcelona and Madrid, where over 100 leaders from public and private organizations could learn about the potential of tools like Machine Learning, Big Data, Stream Analytics, and Power BI.

During the event, Bismart experts presented real cases that demonstrated the ability to predict crime using cutting-edge technologies. Specifically, they showed how, through predictive analysis and the use of Azure Machine Learning, they could predict crime occurrences in districts of the city of Chicago.

The approach is based on the analysis of historical crime data, combined with correlated variables such as lunar phases, weather, lighting, socio-demographic data, and socio-economic data, among others. These data are used to train models in virtual machines, applying algorithms that learn from past mistakes and new trends. Additionally, real-time data can be added through Stream Analytics to obtain updated results on the probability of crimes in different areas and times.
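As a rough illustration of learning from historical data and refreshing the estimate when new records arrive, the sketch below uses a simple smoothed frequency baseline. It is not the Azure Machine Learning model presented at the event. The function names, the choice of (district, hour) as the only variables, and the sample records are all assumptions made for this example.

```python
from collections import Counter

def fit_rates(history):
    """Estimate P(crime) per (district, hour) cell from historical records.

    history: iterable of (district, hour, crime_occurred) tuples.
    """
    totals, crimes = Counter(), Counter()
    for district, hour, occurred in history:
        totals[(district, hour)] += 1
        crimes[(district, hour)] += int(occurred)
    # Laplace smoothing keeps sparse cells away from exactly 0 or 1.
    return {cell: (crimes[cell] + 1) / (totals[cell] + 2) for cell in totals}

def update(history, new_record):
    """Append a newly streamed record and refit, mimicking a real-time refresh."""
    return fit_rates(list(history) + [new_record])

# Made-up sample data, for illustration only.
history = [("D1", 22, True), ("D1", 22, True), ("D1", 22, False), ("D2", 9, False)]
rates = fit_rates(history)
print(rates[("D1", 22)])
```

A production system would add the other variables mentioned above (weather, lighting, socio-demographic data) and a proper learning algorithm. The baseline only shows the shape of the data flow.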

This example shows how the application of Machine Learning and predictive analysis can contribute to building safer cities. Furthermore, tools like Stream Analytics and Social Media Analytics can also be useful for detecting cyber terrorist attacks.

Currently, these technologies, such as Machine Learning, Azure Stream Analytics, and Big Data, are more accessible to companies of all sizes through Microsoft's Azure cloud platform. These tools enable predicting consumer behavior, discovering market trends, analyzing prices, and detecting fraud and crimes. Finally, event attendees were invited to challenge Bismart to develop pilot projects using predictive analysis, Machine Learning, or Stream Analytics with their own data.

Artificial intelligence applications in other sectors

The implementation of artificial intelligence in companies encompasses a wide range of applications, which sometimes makes it difficult to grasp its scope due to its abstract nature. Therefore, we consider some examples of how companies from different sectors are using artificial intelligence in their operations.

Applications of Artificial Intelligence in the Entertainment Sector: The entertainment industry has witnessed numerous advancements thanks to Artificial Intelligence (AI). Some notable applications include facial recognition, virtual and augmented reality for creating immersive experiences, and motion tracking, among others.

One renowned example of AI in the entertainment industry is Netflix's recommendation algorithm. This leading streaming platform not only offers an extensive catalog but also has one of the most effective recommendation algorithms. By using machine learning techniques, Netflix provides personalized recommendations that generate an estimated annual value of $1 billion in customer retention. Moreover, it is estimated that 80% of the platform's views come from these recommendations.

Artificial Intelligence in the Automotive Sector: The automotive industry is widely recognized for its extensive use of Artificial Intelligence. Not only has it been a pioneer in its application, but AI has become a fundamental element for companies in this sector.

A clear example of AI in the automotive sector is the integration of robots in assembly lines. It is now commonplace to see robots performing tasks that were previously carried out by humans, such as shaping aluminum blocks into vehicle parts, painting cars, or assembling components.

In addition to the assembly line, AI has had a significant impact on the development of assisted driving. AI algorithms are used to recognize objects, and this is evident in functions such as parking assistance, where modern vehicles can detect proximity to objects and emit alerts. In the future, assisted driving is expected to expand further to enhance road safety and reduce traffic accidents.

Artificial Intelligence in the Manufacturing Industry: Artificial Intelligence has made significant advancements in the manufacturing sector by simplifying operations and improving their accuracy. It allows companies to plan inventory more efficiently, detect and prevent errors, resolve problems more quickly, and optimize the manufacturing process as a whole.

Artificial Intelligence in Marketing: Marketing is one of the business areas that will experience significant evolution thanks to Artificial Intelligence in the near future. Currently, it is commonly used to automate content generation and optimize customer data collection. However, one of the most promising applications is content personalization, recommendations, and experiences.

Marketing plays a crucial role in direct interaction with customers. In this regard, AI plays a strategic role as marketing, especially digital marketing, moves towards communication and experiences tailored to consumer preferences.

It is evident that Artificial Intelligence has transformed many business areas. Although there were initial concerns about its impact on human intelligence, experience so far suggests that AI brings significant benefits to both businesses and other aspects of our lives.

Text Analytics to fight enterprise data waste

Most enterprise data is not fully exploited, which is a significant problem. Approximately 95% of useful data remains unexploited.

It's astonishing to think that companies choose to utilize only 5% of the available information. However, the real problem lies in the inability to access the remaining 95%.

The main reason for this is that business data lacks proper structure, which means it's disorganized. When data is unstructured, it becomes extremely difficult to find the information needed. Fortunately, there is a solution to this problem: text analytics solutions, specifically intelligent folksonomy.

With the exponential growth of information to process, text analysis systems have become increasingly relevant. Nowadays, companies and organizations need to be able to process data beyond traditional formats, including text written in everyday language that we humans use to communicate.

So, what is text analytics?

Text analytics technologies are those that have the ability to process data in an unstructured format, specifically in the form of written text. These text analysis systems are capable of extracting high-quality information from any type of text.

These technologies are part of artificial intelligence and use algorithms that can identify patterns in unstructured texts. This capability is extremely relevant because it is estimated that 80% of the information relevant to organizations is found in unstructured data, mostly in the form of text.

The good news is that there are numerous text analysis systems available today. However, not all of them have the same capabilities or are suitable for the same purposes. Therefore, it is essential to understand how these technologies work and their differences.

In general terms, text analysis systems are based on one of two methods: taxonomy and folksonomy. The main difference between the two lies in the fact that taxonomy requires prior organization of information using predefined tags to classify content, while folksonomy is based on natural language tagging.
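The contrast between the two methods can be sketched in a few lines. This is a minimal illustration, not how any of the products discussed here work: the `TAXONOMY` dictionary, both function names, and the sample text are hypothetical.

```python
from collections import Counter

# Hypothetical predefined taxonomy: category -> keywords chosen in advance.
TAXONOMY = {
    "cardiology": {"heart", "cardiac"},
    "nephrology": {"kidney", "renal"},
}

def classify_with_taxonomy(text):
    """Top-down: return only the predefined categories whose keywords occur."""
    words = set(text.lower().split())
    return sorted(cat for cat, keywords in TAXONOMY.items() if words & keywords)

def tag_with_folksonomy(user_tags):
    """Bottom-up: count whatever free-form tags users actually applied."""
    return Counter(tag.strip().lower() for tag in user_tags)

doc = "Patient with renal failure and chronic kidney disease"
print(classify_with_taxonomy(doc))
print(tag_with_folksonomy(["CKD", "kidney", "ckd", "dialysis"]).most_common(1))
```

The taxonomy can only ever return categories someone defined beforehand, while the folksonomy surfaces terms such as "ckd" that nobody planned for.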

It is important to take these differences into account when selecting a text analysis system, as each approach has its own advantages and challenges. By understanding the capabilities and features of these systems, companies can make the most of valuable information hidden in unstructured data, thereby improving their decision-making and gaining meaningful insights for their growth and success.

The best Text Analytics systems

There are several tools and software available to perform text analysis. Some of these tools are presented below:

SAS: SAS is a software used to extract valuable insights from unstructured data, such as online content, books, or feedback forms. In addition to guiding the machine learning process, it can automatically reduce generated topics and rules, allowing for tracking changes over time and improving results.

QDA Miner's WordStat: QDA Miner is a tool that enables the analysis of qualitative data. It utilizes the WordStat module for text analysis, content analysis, sentiment analysis, and business intelligence. It provides visualization tools to interpret the results, and its correspondence analysis helps identify concepts and categories in the text.

Microsoft's Cognitive Services Suite: This suite offers a set of artificial intelligence tools that facilitate the creation of intelligent applications with natural and contextual interaction. While not exclusively a text analysis program, it incorporates elements of text analysis in its ability to analyze speeches and language.

Rocket Enterprise's Search and Text Analytics: This tool provides security and ease of use for text analysis. It is particularly useful for teams with limited technological expertise, as it allows for quick information retrieval in large amounts of data.

Voyant Tools: Voyant Tools is a popular text analysis application among digital humanities scholars. It provides a user-friendly interface and the ability to perform various analysis tasks, such as visualizing data in a text.

Watson Natural Language Understanding: Watson, developed by IBM, offers the text analysis system called Watson Natural Language Understanding. It utilizes cognitive technology to analyze text, including sentiment and emotion evaluation.

Open Calais: Open Calais is a cloud-based tool that helps label content. Its strength lies in recognizing relationships between different entities in unstructured data and consequent organization. While it cannot analyze complex sentiments, it can help manage unstructured data and convert it into a well-organized knowledge base.

Folksonomy Text Analytics: Bismart's Folksonomy software uses intelligent tags based on generative artificial intelligence (GenAI) and large language models (LLMs) to filter unstructured data files and locate specific information. This approach eliminates the need to manually define tags and categories, allowing for real-time adaptation to different uses. It is user-friendly and fast, making it well suited to collaborative projects.

These tools offer various capabilities and approaches to text analysis, so it is important to assess specific needs and select the one that best suits each case.

Folksonomy, the smartest branch of text analytics

Folksonomy is a data organization method that utilizes tags. Users label the content with their own words or categories, allowing them to explore all the tags created with natural language and the numerous associated descriptions.

A significant breakthrough in the analysis of unstructured data is the large language model (LLM). LLMs use artificial intelligence to analyze large volumes of information in real time. Their main advantage is the ability to comprehend natural language, which makes it easier to identify patterns and trends in the data. The combination of LLMs and folksonomy can be a powerful tool for analyzing unstructured data in health and clinical research, as it allows for a deep understanding of the natural language used in these areas. Additionally, automating the creation of the master entity through folksonomy makes data analysis faster and more efficient, which is particularly useful in clinical situations that require real-time decision-making.

One of the key advantages of folksonomy is its ability to work with unstructured data. Until recently, only structured information could be used for data analysis, which means information that had been prepared for computer processing. However, information in text, audio, etc., formats could not be treated in this manner and required manual processing. The problem is that, according to Gartner, 95% of the value of information lies in unstructured data.

Previously, to analyze this unstructured information, it was necessary to create a master entity that would allow for the classification of information within the text. However, this master entity had to be created manually, which was a laborious and error-prone process. Additionally, it is possible that the entity does not encompass all the valuable information present in the data. In many cases, creating the master entity required more effort than manually analyzing the documents.

An additional advantage of folksonomy is that it does not require a master entity, as it simultaneously analyzes all documents based on weighting rules according to the grammatical category of words, allowing for the automatic identification of the most relevant terms. In this way, the master entity is created automatically. This working approach is known as bottom-up, as opposed to the top-down approach of manual entity creation. The bottom-up approach also allows for data discovery, meaning that relevant terms in the data that were not previously known can be identified and would not have been included in the master entity if it had been created manually. The same applies in reverse: concepts that are not present in the documents will not appear in a master entity created through folksonomy.
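A minimal sketch of the bottom-up idea, weighting words by grammatical category across all documents at once, might look like the following. The weights, the toy lexicon standing in for a real part-of-speech tagger, and the function name are assumptions made for illustration and do not describe the actual rules used by the software.

```python
from collections import defaultdict

# Hypothetical weights per grammatical category (assumed for illustration).
POS_WEIGHTS = {"NOUN": 3.0, "ADJ": 1.5, "VERB": 1.0}

# Toy part-of-speech lexicon; a real system would use a POS tagger instead.
TOY_LEXICON = {
    "patient": "NOUN", "diabetes": "NOUN", "metformin": "NOUN",
    "chronic": "ADJ", "treated": "VERB", "admitted": "VERB",
}

def extract_terms(documents, top_n=3):
    """Score every known word across all documents; return the top terms."""
    scores = defaultdict(float)
    for doc in documents:
        for word in doc.lower().split():
            word = word.strip(".,;:")
            pos = TOY_LEXICON.get(word)
            if pos is not None:  # words missing from the lexicon score nothing
                scores[word] += POS_WEIGHTS[pos]
    # Highest score first; ties broken alphabetically for a stable result.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [term for term, _ in ranked[:top_n]]

docs = [
    "Patient admitted with chronic diabetes.",
    "Diabetes treated with metformin.",
]
print(extract_terms(docs))
```

The resulting term list plays the role of the automatically generated master entity: it contains only what is present in the documents, which is the data-discovery property described above.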

The folksonomy is built from the bottom up: users themselves add tags to the content, rather than relying on predefined tags that may not fit the content actually available. This gives a broad view of all available data instead of having to guess what is there. There is also no strict hierarchy, which makes it flexible to use. Although standard folksonomy is a useful tool, the lack of linguistic control can cause problems and confusion. Different people may use different words to describe the same content, or the system may fail to distinguish between homonyms, such as the acronym "ONCE" and the Spanish word "once", which means the number eleven.

Such ambiguity can prevent knowledge extraction from unstructured data.

This is why new intelligent folksonomy systems have emerged to help identify precise information. This new generation of folksonomy leverages technological advancements to provide intelligent tags to your data, making significant progress in solving many problems associated with folksonomy. Despite these changes, folksonomy still maintains its focus on natural and intuitive language.

Folksonomy Text Analytics

Bismart's intelligent folksonomy software addresses many of the problems and confusion caused by the lack of linguistic control in standard folksonomy. It is now possible to accurately identify the information you need, even when you have a large amount of data.

This software allows for the combination of synonyms, differentiation of homonyms, addition of technical or custom dictionaries, and even reduction of tags using a blacklist. Its intelligent algorithms also take into account errors and duplicate content.
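The kind of clean-up described here can be sketched as follows. Everything in this example (the synonym table, the blacklist, both function names, and the capitalization rule for homonyms) is a hypothetical illustration and not the product's API or its actual algorithms.

```python
# Hypothetical synonym table and blacklist, for illustration only.
SYNONYMS = {"dm2": "type 2 diabetes", "t2dm": "type 2 diabetes"}
BLACKLIST = {"patient", "hospital"}

def normalize_tags(raw_tags):
    """Map synonyms to one canonical tag; drop blacklisted tags and duplicates."""
    result = []
    for tag in raw_tags:
        tag = tag.strip().lower()
        tag = SYNONYMS.get(tag, tag)
        if tag and tag not in BLACKLIST and tag not in result:
            result.append(tag)
    return result

def disambiguate(token):
    """Crude homonym rule: all-caps 'ONCE' is the acronym, otherwise a plain word."""
    if token == "ONCE":
        return "ONCE (organization)"
    return token.lower()

print(normalize_tags(["DM2", "T2DM", "Patient", "metformin", "metformin"]))
print(disambiguate("ONCE"), "|", disambiguate("once"))
```

A custom dictionary would simply extend `SYNONYMS` with domain-specific abbreviations, which is why such tables are usually kept as editable data rather than code.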

It is a user-friendly tool, with options such as a drag-and-drop menu for synonyms, as well as an advanced search engine. Its implementation is quick and easy, and it can be restructured in real-time to adapt to your needs. There are multiple structuring options available to tailor the tool to different requirements.

With this software, you can quickly leverage the knowledge from your unstructured data through a bottom-up approach, without the need for manually creating and defining tags and structures.

Standard folksonomy provides a wealth of valuable information, and Bismart's Folksonomy Intelligence allows you to extract useful insights from this information.

How do we apply Folksonomy Text Analytics in healthcare?

In the clinical field, vast amounts of data are generated daily, including medical admissions and discharges, as well as medical records. These data contain valuable information for healthcare professionals and administration. However, manually extracting this information is impossible due to the sheer volume of data and the fact that it is written in unstructured natural language. To tackle this challenge, the nephrology department at Hospital del Mar in Barcelona, in collaboration with the Ferrer group, partnered with Bismart to implement a text analysis project that would enable efficient information extraction.

The goal of the project was to understand the thousands of medical discharges available and extract relevant clinical knowledge. To achieve this, Bismart provided the hospital with the Folksonomy tool, capable of extracting information from unstructured data in various formats, such as text, image, video and audio.

The nephrology department had generated over 1600 hospital discharge documents in a period of three years. These documents presented the additional challenge that each doctor used different abbreviations for the same tests, diseases, or medications. Therefore, it was necessary to have a tool that could identify these words as synonyms.

The benefits that the Bismart Folksonomy project has provided to the Hospital del Mar and the Ferrer Group include the extraction of knowledge from unstructured information, intelligent recommendations, acceleration in the generation of medical knowledge, and reduction of variability. Specifically, the tool allowed for the identification of synonyms and implications, management of keywords through tags, and classification of certain words and terms into black or white lists, among others.

Furthermore, the tool has improved decision-making that benefits patients and the system, and has facilitated the training of healthcare professionals. This training has been based on three types of big data:

- Descriptive big data: it has allowed the evaluation of health outcomes, the identification of previously unknown relationships, the connection of data sources generated in clinical practice, and the recruitment of patients for clinical trials.

- Predictive big data: it has made it possible to predict clinical events.

- Prescriptive big data: it has facilitated real-time decision-making based on best practices.

Thanks to this tool, healthcare professionals have been able to understand clinical practice and its variability, make informed decisions based on real-time information, determine the population's epidemiology, generate clinical research hypotheses, conduct observational studies, predict clinical cases before they occur, automatically extract patient variables based on search criteria, and establish non-obvious correlations.

Previously, without Folksonomy, discovering this information required a tedious and costly manual process that could take weeks. Doctors had to read and analyze thousands of documents and manually establish relationships between them.

After applying data normalization and quality processes with Bismart's Folksonomy, doctors were able to answer their questions in a few hours. This was possible thanks to the extraction of knowledge from the unstructured data of medical records available in the hospital. For example, the results of objective 1 revealed that 39.91% of patients admitted to nephrology were diabetic, a total of 651, of which 89 were being treated with metformin.
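The figures in this example can be cross-checked with a little arithmetic. The two derived values below are not reported in the source. They are computed here only as a consistency check, and the variable names are chosen for this sketch.

```python
diabetic_patients = 651   # from the text
diabetic_share = 0.3991   # 39.91%, from the text
on_metformin = 89         # from the text

# Derived, not reported in the source: cohort size implied by the share.
implied_cohort = round(diabetic_patients / diabetic_share)

# Derived: percentage of diabetic patients treated with metformin.
metformin_share = round(100 * on_metformin / diabetic_patients, 2)

print(implied_cohort, metformin_share)
```

The implied cohort of roughly 1,631 is in line with the "over 1600" discharge documents mentioned earlier, although documents and patients are not necessarily the same unit.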

Applications of Artificial Intelligence: Folksonomy

Folksonomy is a powerful tool that can greatly benefit any corporation, and you might already be utilizing it without even realizing it. It offers a unique way to organize data and digital content, where the consumers themselves contribute by adding classes or tags to identify specific content. Users provide a plethora of descriptive information using natural language. You may have come across it being referred to as social bookmarking or social tagging.

Have you recently made use of this tool? Let's see if the first example triggers your memory.

Harnessing the Power of Folksonomy: Social Media

Have you ever used a hashtag on Twitter, Instagram, Facebook, or Pinterest? Then you have already used folksonomy!

All of these sites use hashtags. They make it easy for users to find relevant content; all you have to do is click on a tag to see more content tagged the same way.

Anyone can incorporate any hashtag they want. There are no rules, so you can come across a wide variety of content from "#cute" to "#catsofinstagram".

The first one, "#cute", is an example of a general tag that can be applied to many different types of content. On the other hand, "#catsofinstagram" refers to only one type of content (cat images on Instagram) and is therefore an example of a narrow tag.

Harnessing Folksonomy: Social Bookmarking

A classic example of folksonomy was a social bookmarking site that has since disappeared. It allowed users to mark interesting websites as favorites and share them.

Each user could tag their highlighted pages with any words they wanted. So, if someone wanted to search for articles on a specific topic, they just had to type in the corresponding tag to get a list of the most recent bookmarks with that tag.
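The tag lookup described above amounts to an index from free-form tags to bookmarks, sorted by recency. The sketch below is a toy model under that assumption. The `BookmarkStore` class, its methods, and the example URLs are hypothetical and do not reproduce any real site's implementation.

```python
from collections import defaultdict

class BookmarkStore:
    """Toy social-bookmarking store: free-form tags mapped to saved URLs."""

    def __init__(self):
        self._by_tag = defaultdict(list)  # tag -> [(timestamp, url), ...]

    def add(self, url, tags, timestamp):
        """Save a URL under every tag the user chose (case-insensitive)."""
        for tag in tags:
            self._by_tag[tag.lower()].append((timestamp, url))

    def recent(self, tag, limit=10):
        """Return URLs saved under `tag`, newest first."""
        entries = sorted(self._by_tag.get(tag.lower(), []), reverse=True)
        return [url for _, url in entries[:limit]]

store = BookmarkStore()
store.add("https://example.org/a", ["python", "tutorial"], timestamp=1)
store.add("https://example.org/b", ["Python"], timestamp=2)
print(store.recent("python"))
```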

The site also featured a hot list and a page with the latest articles, making it even easier to find relevant content. It also offered certain general categories, such as "art and design," which users could browse to find interesting content.

Another similar site that has also disappeared is 43 Things (the page can still be visited, but it is no longer the same service).

Use of Folksonomy: Flickr

Flickr, the community for sharing images, was one of the first to embrace folksonomy with the use of tags. Users upload photos and then add the tags they prefer to describe them. Once the photos are uploaded, other users can also add tags. Additionally, a photo can be tagged with a location.

One feature of Flickr is that it uses these tags to help users find more photos they may like. The page highlights trending tags at the moment and dedicates a section to the most popular tags of all time. With just one click, users can find hundreds or thousands of images they enjoy.

Use of Folksonomy: Major search engines

Although folksonomy offers various functions to help users find interesting information on social networks and Flickr, it can also be used for more serious purposes, such as improving medical services.

Academic folksonomies are a powerful tool for researchers because they can facilitate the organization of large sets of information and simplify the search. Medical researchers use programs like Bibsonomy and CiteULike to generate metadata quickly and economically without compromising quality. They can extract information from both texts and images.

With some of these programs, users can create their own sets and organize them, as well as share them with other users. Just like in Flickr, it's easy to discover more relevant content with little effort.

Another known program was Connotea, but it closed in 2013.

Folksonomy is very useful, but sometimes it can become a bit chaotic. It's easy to feel overwhelmed by the number of tags that users use, which can cause confusion. That's why Bismart has developed intelligent folksonomy software.

This application is easy to use and is designed to facilitate the extraction of knowledge from unstructured data. It can distinguish homonyms, merge synonyms, reduce tags, and add custom dictionaries. Its intelligent algorithms also check for errors and duplicate content. It works with any type of content, from web pages to large databases of medical information.

Applications of Artificial Intelligence: Natural Language Understanding

Natural Language Understanding (NLU) is a sub-branch of natural language processing that has gained popularity for its use in analyzing large-scale content. NLU enables the discovery of audiovisual content, whether it comes from structured or unstructured data, in significant volumes.

The technology of voice and speech recognition has evolved tremendously in recent decades. It first emerged in the 1950s with Bell Laboratories' Audrey system, which could understand numbers. This was followed by IBM's Shoebox system, which could process 16 English words. Since then, speech recognition systems have reached a remarkably high level of technological complexity.

New applications of natural language processing

Currently, voice recognition systems are available on all smart devices and have the ability to understand continuous speech, distinguish voices, and comprehend multiple languages and a vast array of words. The applications for this technology have evolved, from its original use in professional and work environments to its integration into everyday entertainment and household activities.

The possibilities offered by voice recognition technology are numerous. It is used in the customer service industry to direct calls and manage large volumes of users. Biometrics are now being introduced in this field to detect voice tones and speaking patterns, which allows for user authentication and helps prevent banking fraud and identity theft. The technology also assists individuals who may have difficulties with conventional activities.

Recently, home devices incorporating this technology have emerged. Examples include Amazon's Echo, which uses Alexa for user communication, Apple's HomePod, and Google Home. These devices have features that can be activated through voice commands, enabling a multitude of tasks such as ordering a taxi or contacting a primary care physician.

Furthermore, voice recognition technology is growing in the field of search. According to Google Trends (via Search Engine Watch), voice searches increased 38-fold from 2008 to 2016.

Dictation

One of the most transformative uses is dictation, which significantly reduces the time spent on writing text and transcribing audio. Numerous applications and programs that rely on this dictation function have emerged, such as Dragon NaturallySpeaking, Braina, and Sonix.

These programs are highly beneficial for transcribing oral texts, interviews, and other oral and written content that professionals such as journalists or content writers deal with. However, voice structuring offers even more possibilities.

At Bismart, we utilize voice recognition for some of our projects. For example, Folksonomy Text Analytics can work with audio files to find the information you need. This eliminates the need to spend time and resources listening to and transcribing audiovisual documents to extract all the information they may contain. It is especially useful when dealing with a vast amount of documents that would be impossible to process manually.

The Distinction between Folksonomy and Taxonomy

Organizing and tagging data and digital content is a task that can be approached in different ways, with two of the most common methods being folksonomy and taxonomy. Although both techniques aim to solve the same problem, there are significant differences between folksonomy and taxonomy, primarily in their approach.

Taxonomy is a structured and hierarchical way of classifying information based on its similarities. Categories are established by the person who creates or owns the content, and its goal is to facilitate access to the material. It is commonly used in organizing websites and content repositories.

However, taxonomy presents certain challenges. It can be costly and time-consuming to build and maintain. In addition, the language used may be confusing for end users and may not reflect their needs. Sometimes creators do not use clear or effective tagging systems, making it difficult for users to find information.

Folksonomy, on the other hand, relies on tags applied by users who consume the content rather than its creator. Instead of following a pre-established hierarchy, users apply tags they find most useful for organizing information, using the language they prefer.

This approach can be seen on platforms such as Flickr, where users tag content in natural language.

Folksonomy can be a powerful tool when many users tag the same piece of information. Companies can leverage this information to improve content structuring and help users find what they are looking for. Additionally, it is flexible and user-friendly.
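To illustrate how tags from many users can be combined, here is a minimal sketch in Python. The sample tags and the `top_tags` helper are hypothetical and only show the general idea of counting how often each tag is applied to one item.

```python
from collections import Counter


def top_tags(user_tags, n=3):
    """Return the n most frequent tags across all users for a single item.

    user_tags is a list of tag lists, one list per user.
    Tags are lowercased and stripped so "Sunset" and "sunset" count together.
    """
    counts = Counter(
        tag.strip().lower() for tags in user_tags for tag in tags
    )
    return counts.most_common(n)


# Hypothetical tags applied by four users to the same photo.
photo_tags = [
    ["beach", "sunset"],
    ["Sunset", "sea"],
    ["sunset", "beach", "holiday"],
    ["sea", "beach"],
]

print(top_tags(photo_tags, n=2))
```

The tags that most users agree on rise to the top, which is the signal a company could use to improve how its content is structured.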

Despite solving some of taxonomy's issues, folksonomy also has its disadvantages.

One of them is the lack of organization, as its operation can become chaotic. For example, different people may tag the same color in different ways: one as "teal," another as "turquoise," and another as simply "blue" or "green." This can result in too many different tags for a single piece of content.

Furthermore, these tags can be ambiguous due to the lack of strict standards to follow.

Another issue is the presence of abbreviations and acronyms, which can be confused with similar topics or words. For example, a folksonomy might struggle to tell whether "ONCE" refers to the Organización Nacional de Ciegos Españoles (National Organization of Spanish Blind People) or to "once," the Spanish word for the number eleven. It can also have difficulty with synonyms or technical terms.

Intelligent Folksonomy

While standard folksonomy is a useful tool, its lack of linguistic control leads to numerous problems and confusion. This means that extracting new perspectives and knowledge from unstructured data is not always possible. That's why we have developed intelligent folksonomy to help you find the precise information you need.

These cutting-edge tagging systems utilize the latest technological advancements to provide intelligent labels for your data, automating the creation and definition of tags and structures.

This approach addresses the problems of folksonomy while preserving its natural and intuitive language.

An example of such systems is our Folksonomy Text Analytics software, which is built on intelligent folksonomy. This software allows for the identification of synonyms, the differentiation of homonyms, and the addition of technical or custom dictionaries tailored to your specific needs. It can also reduce the number of tags using a blacklist, and its algorithms take errors and duplicate content into account.
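The kind of tag clean-up described above can be sketched in a few lines of Python. This is an illustrative example only, not how Folksonomy Text Analytics is implemented: the synonym dictionary, the blacklist, and the `normalize_tags` function are all assumptions made for the sketch. Homonym differentiation (the "ONCE" case) needs context and is not covered here.

```python
# Hypothetical synonym dictionary: maps variants to one canonical tag.
SYNONYMS = {"turquoise": "teal", "aqua": "teal"}

# Hypothetical blacklist: tags too generic to be useful.
BLACKLIST = {"photo", "image"}


def normalize_tags(raw_tags, synonyms=SYNONYMS, blacklist=BLACKLIST):
    """Lowercase tags, map synonyms, drop blacklisted tags and duplicates.

    The order of first appearance is preserved.
    """
    seen = set()
    result = []
    for tag in raw_tags:
        key = tag.strip().lower()
        key = synonyms.get(key, key)  # replace a synonym with its canonical tag
        if not key or key in blacklist or key in seen:
            continue
        seen.add(key)
        result.append(key)
    return result


print(normalize_tags(["Teal", "turquoise ", "photo", "blue", "teal"]))
# -> ['teal', 'blue']
```

Five raw tags collapse into two, while the users' own vocabulary ("teal," "blue") is kept.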

Artificial Intelligence in the Hotel Industry

Artificial intelligence is reshaping the world around us. The year 2023 will likely be remembered as the year ChatGPT, a deep learning language model released in late November 2022, brought artificial intelligence to the forefront of public opinion.

The reality is that artificial intelligence is transforming the way businesses operate and generating new ways of doing business. According to Forbes, ChatGPT has become the fastest-adopted enterprise technology in history.

One of the most significant advantages of AI is its wide range of applications, making it useful for all types of businesses. Each industry, of course, will apply artificial intelligence differently based on its activities, although there are practical cases where AI is beneficial for any sector.

One of the sectors that can make the most of artificial intelligence is the service sector, particularly the hotel industry. Although many hotel chains already use AI, sometimes without realizing it, the sector can harness it further in areas that remain underexplored. Artificial intelligence is an abstract technology with a wide range of potential applications, which can make its scope hard to grasp. Nevertheless, it is one of the most promising technologies of the last hundred years.

In today's highly competitive business environment, hotel companies need to stay one step ahead of the competition to avoid losing market share. Investing in innovative technologies like artificial intelligence can even become an added value to the service a hotel offers to its customers.

Next, we will explore some of the possible applications of artificial intelligence in the hotel industry.

- Occupancy Prediction: Predictive analysis, a key branch of artificial intelligence, is utilized by hotel chains to forecast occupancy during specific periods. This capability aids in anticipating staffing needs, resource requirements, and supply demands, as well as predicting service demand and sales points.

- Operations and maintenance management: Artificial intelligence plays a crucial role in the overall management of hotels, spanning various areas such as staff scheduling, energy usage monitoring, and predictive maintenance. These applications enhance efficiency and service quality, prevent management errors, and drive cost reduction.

- Dynamic pricing strategy: Predictive analytics enables the implementation of a dynamic pricing strategy based on competitor behavior and projected demand. It also aids in predicting inflation and the price of consumer goods, optimizing the hotel's procurement processes.

- Customer service chatbots: AI-powered chatbots enhance guest experiences by providing quick and convenient assistance with reservations, payments, and post-stay support. This not only fosters customer loyalty but also increases satisfaction levels.

It is important to note that chatbots do not replace human attention in the hotel industry, but rather enhance the guest experience by providing 24/7 assistance and handling common requests. This allows the staff to focus on providing personalized attention and service.

- Sentiment Analysis: Natural Language Processing (NLP) is a prominent application of artificial intelligence that enables sentiment analysis. By analyzing textual content such as online reviews, AI algorithms can determine whether the expressed emotions are positive, negative, or neutral.

Sentiment analysis has multiple applications, including evaluating customer opinions on social media, monitoring brand reputation, and detecting trends in public opinion.

- Personalizing the customer experience: Artificial intelligence (AI) has the power to enhance guests' hotel experience through personalization. This is achieved by providing tailored recommendations for each customer and utilizing technologies such as voice and facial recognition.

Tailored recommendations: AI analyzes guest data, including room preferences, past activities, previous bookings, and social media opinions, to offer personalized recommendations. For example, it can suggest nearby restaurants that cater to guests' dietary preferences or recommend activities based on their interests. Personalizing the customer experience is crucial for building guest loyalty and standing out from the competition. Increasingly, guest satisfaction depends on intangible aspects of the experience provided.

Voice recognition and facial recognition: AI utilizes voice and facial recognition to personalize the guests' experience. For instance, a facial recognition system can detect when a guest arrives and tailor the welcome screen with relevant information. Additionally, voice recognition allows guests to interact with the hotel in a natural and personalized manner.
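The sentiment analysis application listed above can be illustrated with a very simple word-list approach. This is a minimal sketch under stated assumptions: the `POSITIVE` and `NEGATIVE` word lists and the `classify_review` function are hypothetical, and production systems typically rely on trained language models rather than fixed lists.

```python
import re

# Hypothetical word lists for hotel reviews; a real lexicon would be much larger.
POSITIVE = {"clean", "friendly", "great", "comfortable", "excellent"}
NEGATIVE = {"dirty", "rude", "noisy", "broken", "terrible"}


def classify_review(text):
    """Label a review as positive, negative, or neutral by counting words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


reviews = [
    "The staff were friendly and the room was clean.",
    "Noisy room and a broken shower.",
    "We stayed two nights.",
]
for review in reviews:
    print(classify_review(review), "-", review)
```

A word-count approach like this misses negation and sarcasm ("not clean" would count as positive), which is one reason NLP models are used in practice.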

These are just a few examples of how artificial intelligence is revolutionizing the hotel industry. Its versatility and potential for improvement continue to expand, providing opportunities to optimize management and offer more efficient and personalized services to guests.

Hotel chains are increasingly adopting artificial intelligence (AI) as a tool to improve both operational efficiency and guest experiences. By harnessing the power of AI, these chains can optimize various internal aspects such as inventory management and staff scheduling, saving time and reducing costs in their processes.

Moreover, AI offers the ability to personalize the guest experience by providing tailored recommendations, utilizing AI-powered chatbots, and incorporating technologies like facial and voice recognition. By implementing these AI-based solutions, hotel chains can enhance customer satisfaction and foster guest loyalty, ultimately contributing to overall business success.

With the continuous advancement of AI technology, it is expected that hotel chains will continue to embrace these solutions and seize new opportunities to further enhance their operations and deliver increasingly personalized and satisfying guest experiences.

How can artificial intelligence improve hotel occupancy rates?

Artificial Intelligence (AI) is an integral part of the business culture and has made a significant impact across various industries. From more accurate data analysis to the implementation of machine learning solutions, AI has greatly improved the efficiency and profitability of many global companies.

The hotel industry is no exception to this trend. More and more hotels are embracing AI-based technologies to automate their day-to-day operations. AI can be utilized in numerous ways by hotel chains, but in this particular case, we will focus on the use of predictive analysis and machine learning to optimize hotel occupancy rates.

1. Machine learning to improve customer experience

To improve the customer experience in the hotel industry, predictive analytics and machine learning are key technologies. These technologies can help you personalize the experience of each guest who visits your hotel. Customers today expect to receive personalized treatment based on their personal preferences, needs and expectations, and working with Big Data makes this possible.

Predictive analytics relies heavily on historical data to predict future customer behavior. Patterns in a customer's past behavior can be detected and used to offer them the most suitable room, services, and personalized offers. In some cases, predictive analytics can even anticipate customer needs before customers are aware of them. It is critical to analyze how certain types of guests behave after check-in and to adjust services accordingly for future customers.

2. Adaptive Pricing Strategies

AI can also assist in implementing adaptive pricing strategies in the hotel industry. Many customers seek affordable rooms and services, but this may not always be the most profitable option for the company. By utilizing data analysis, a better understanding can be gained of the price expectations for different types of rooms and services.

Consulting with a data analysis expert is recommended to optimize the current pricing strategy. Subsequently, it is crucial to gather and analyze information on customer response to these prices. It is important to note that adaptive pricing strategies are directly linked to market fluctuations and customer expectations, therefore requiring regular updates and adjustments.
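As a rough illustration of an adaptive pricing rule, the sketch below raises or lowers a base rate according to how forecast occupancy compares with a target. The function name, the parameters, and their default values are assumptions chosen for the example, not recommended settings; a real strategy would also account for competitor prices and observed customer response.

```python
def adaptive_price(base_price, forecast_occupancy, target_occupancy=0.75,
                   sensitivity=0.5, min_factor=0.8, max_factor=1.5):
    """Scale a base room rate by the gap between forecast and target occupancy.

    Occupancy values are fractions between 0 and 1. The resulting factor is
    clamped to [min_factor, max_factor] to avoid extreme price swings.
    """
    gap = (forecast_occupancy - target_occupancy) / target_occupancy
    factor = 1.0 + sensitivity * gap
    factor = max(min_factor, min(max_factor, factor))
    return round(base_price * factor, 2)


for occupancy in (0.30, 0.75, 0.90):
    print(f"forecast {occupancy:.0%}: price {adaptive_price(100, occupancy)}")
```

Because the rule depends on a forecast and a target, both need to be reviewed regularly, in line with the point above that adaptive pricing requires ongoing updates and adjustments.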

3. Improving customer service

It is essential to create personalized experiences for customers, and customer service is a key aspect of this. With the assistance of artificial intelligence, we can significantly improve the service we provide to current and future customers.

4. Elevating marketing

In addition to customer service, marketing is also crucial. Fortunately, predictive analytics and artificial intelligence are the perfect technologies to take our marketing strategy to the next level. Any effective marketing strategy must be based on data collection and analysis, as information about our customers, products and services, and the market is crucial for carrying out more effective marketing actions.

5. Occupancy and Demand Forecasting

Last but not least, we can use data analysis to predict our hotel's occupancy rate and demand. Various artificial intelligence-based tools allow us to forecast how many rooms will be occupied during a specific period, which in turn helps us anticipate how many staff members and which supplies we will need. These technologies can also help prevent management errors.

Predictive analysis also enables us to anticipate the overall demand for our services. By understanding the approximate demand rate, we can better cater to our customers, optimize resources, and increase profitability per guest.
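A very simple version of occupancy forecasting can be sketched by averaging past occupancy for each day of the week and deriving staffing from the result. The sample history, the function names, and the figure of 12 rooms per housekeeper are all hypothetical; real forecasting tools use richer models and more data, such as seasonality, events, and bookings on hand.

```python
import math
from statistics import mean


def forecast_by_weekday(history):
    """Average past occupied rooms per weekday.

    history is a list of (weekday, rooms_occupied) pairs.
    Returns a dict mapping each weekday to its average occupancy.
    """
    by_day = {}
    for weekday, rooms in history:
        by_day.setdefault(weekday, []).append(rooms)
    return {day: mean(values) for day, values in by_day.items()}


def housekeepers_needed(expected_rooms, rooms_per_housekeeper=12):
    """Estimate staff needed, rounding up so no room is left uncovered."""
    return math.ceil(expected_rooms / rooms_per_housekeeper)


# Hypothetical occupancy from two past weekends.
history = [
    ("Fri", 80), ("Sat", 95), ("Sun", 60),
    ("Fri", 90), ("Sat", 97), ("Sun", 50),
]

forecast = forecast_by_weekday(history)
for day, rooms in forecast.items():
    print(day, rooms, "rooms,", housekeepers_needed(rooms), "housekeepers")
```

Even this naive average shows how a forecast translates directly into a staffing decision for each day.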

Let's talk about bots.